Technion & UCLA Researchers Identify a Structured Code Used by Neurons in the Human Brain to Represent Speech Movements

The researchers were able to decode vowels directly from the neural activity that leads to their articulation, a finding that could allow individuals who are completely paralyzed to “speak” to the people around them through a direct brain-computer interface.

“There are diseases in which the patient’s entire body is paralyzed. The patient is effectively ‘locked in’ (locked-in syndrome) and unable to communicate with the environment, but the mind still functions,” explains Prof. Shoham, Head of the Neural Interface Engineering Laboratory in the Technion Department of Biomedical Engineering. “Our long-term goal is to restore these patients’ ability to speak using systems that will include implanting electrodes in their brains, decoding the neural activity that encodes speech, and producing artificial speech sounds. For this purpose, we first wanted to understand how the information about the articulated syllable is encoded in the electrical activity of an individual brain neuron and of a neuron population. In our experiments we identified cell populations that distinctly participate in the representation. For example, cells we recorded in an area of the medial frontal lobe that includes the anterior cingulate cortex surprised us by how ‘sharply’ they represented certain vowels but not others, even though the area is not necessarily known to have a major role in the speech generation process.”

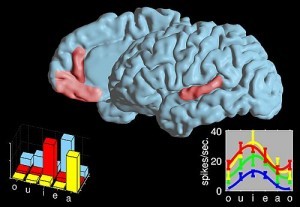

The experiments were conducted at the UCLA Medical Center with the participation of epilepsy patients, in whose brains Prof. Fried and his team implanted depth electrodes. The objective of the implant is to locate the epileptic focus, the area of the brain where epileptic seizures begin. After the surgery, the patients were hospitalized for a week or two with the electrodes in their brains, waiting for spontaneous seizures to occur. During that time, Dr. Tankus, who was a post-doctoral fellow at UCLA and is now a researcher at the Technion, conducted experiments in which he asked the patients to articulate vowels as well as syllables comprising a consonant and a vowel, and recorded the resulting neuronal activity in their brains.

The researchers discovered two neuron populations that encode the information about the articulated vowel in entirely different ways. In the first population, identified in the medial frontal lobe, each neuron encodes only one or two vowels by changing its firing rate, and does not change its activity when other vowels are articulated. In the second population, located in the superior temporal gyrus, each neuron responds to all of the vowels tested, but the strength of its response changes gradually from vowel to vowel. Moreover, the researchers were able to derive a mathematical arrangement of how the vowels are represented in the brain and showed that it matches the phonetic vowel trapezoid, which is built according to the location of the highest point of the tongue during articulation. Thus, the researchers succeeded in connecting the brain representation with the anatomy and physiology of vowel articulation.
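The contrast between the two populations can be made concrete with a toy model. The sketch below is purely illustrative and is not taken from the study: the vowel set, the firing rates, the ordering of vowels, and the function names `selective_tuning`, `graded_tuning`, and `responsive_vowels` are all assumptions chosen for the example.

```python
# Toy illustration of the two coding schemes. All numbers are hypothetical.
VOWELS = ["a", "e", "i", "o", "u"]  # assumed vowel set, for illustration only


def selective_tuning(preferred, baseline=2.0, peak=20.0):
    """Rates (spikes/s) for a neuron that fires above baseline only for
    its preferred vowel(s), like the frontal population described above."""
    return {v: (peak if v in preferred else baseline) for v in VOWELS}


def graded_tuning(order, low=5.0, high=25.0):
    """Rates for a neuron that responds to every vowel, with a response
    that changes gradually along `order`, like the temporal population."""
    step = (high - low) / (len(order) - 1)
    return {v: low + step * i for i, v in enumerate(order)}


def responsive_vowels(tuning, baseline, threshold=1.5):
    """Vowels whose rate exceeds `threshold` times the baseline rate."""
    return [v for v, rate in tuning.items() if rate > threshold * baseline]


frontal = selective_tuning({"i"})
temporal = graded_tuning(["i", "e", "a", "o", "u"])  # hypothetical ordering

print("frontal-like neuron responds to:", responsive_vowels(frontal, 2.0))
print("temporal-like neuron responds to:", responsive_vowels(temporal, 2.0))
```

In this toy model the selective neuron carries information about a single vowel, while the graded neuron carries some information about every vowel through the size of its response.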

Understanding how the brain represents speech generation is also a significant step toward decoding cellular activity with a computer, as Dr. Tankus explains: “We have developed a new algorithm that greatly improved the ability to identify from brain activity which syllable was articulated, and this algorithm has allowed us to obtain very high identification rates. Based on the present findings, we are currently conducting experiments toward the creation of a brain-machine interface that will restore people’s speech faculties.”
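To give a sense of what identifying the articulated sound from brain activity means in practice, here is a minimal sketch of a generic nearest-centroid decoder applied to simulated firing rates. It is not the algorithm developed by the researchers, which is not described here. The table `MEAN_RATES`, the noise level, and the helper names are hypothetical, and the data are synthetic.

```python
import math
import random

# Hypothetical mean firing rates (spikes/s) of three simulated neurons
# for each vowel. These values are invented for the example.
MEAN_RATES = {
    "a": [20.0, 2.0, 15.0],
    "e": [2.0, 20.0, 10.0],
    "i": [2.0, 2.0, 5.0],
    "o": [20.0, 20.0, 20.0],
    "u": [2.0, 2.0, 25.0],
}


def simulate_trial(vowel, rng, noise_sd=2.0):
    """One noisy population response to `vowel` (rates clipped at zero)."""
    return [max(0.0, rng.gauss(m, noise_sd)) for m in MEAN_RATES[vowel]]


def fit_centroids(trials):
    """Average the response vectors of each vowel over labeled trials."""
    sums, counts = {}, {}
    for vowel, rates in trials:
        acc = sums.setdefault(vowel, [0.0] * len(rates))
        for k, r in enumerate(rates):
            acc[k] += r
        counts[vowel] = counts.get(vowel, 0) + 1
    return {v: [s / counts[v] for s in acc] for v, acc in sums.items()}


def decode(rates, centroids):
    """Return the vowel whose centroid is closest (Euclidean distance)."""
    return min(centroids, key=lambda v: math.dist(rates, centroids[v]))


rng = random.Random(0)  # fixed seed so the simulation is repeatable
train = [(v, simulate_trial(v, rng)) for v in MEAN_RATES for _ in range(20)]
test = [(v, simulate_trial(v, rng)) for v in MEAN_RATES for _ in range(20)]

centroids = fit_centroids(train)
correct = sum(decode(rates, centroids) == v for v, rates in test)
accuracy = correct / len(test)
print(f"decoding accuracy on simulated trials: {accuracy:.2f}")
```

The decoder learns an average response pattern per vowel from labeled trials and assigns each new trial to the closest pattern. Real recordings are far noisier and more variable than this simulation, so the accuracy printed here says nothing about the identification rates reported by the researchers.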

Above: The two language areas where cell responses during speech were studied. The graphs present a selective code for vowels in an area of the frontal lobe and a non-selective code in an area of the temporal lobe (each color represents a neuron).

The image highlights the brain areas where neurons with a structured code for speech generation were found. In the frontal region, neurons were highly vowel-selective, while in the temporal region (on the right) the code was broad and non-selective.

Credit: Ariel Tankus