Deep Learning

Researchers from the Technion Computer Science Department introduce an unprecedented theoretical foundation for one of the hottest scientific fields today – deep learning

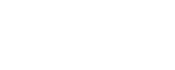

In a recent article, Prof. Elad and his PhD students Vardan Papyan and Yaniv Romano introduce a broad theory explaining many of the important aspects of multi-layered neural networks, which are the essence of deep learning.


Initial seed ideas in the 1940s and 1950s, elementary applications in the 1960s, promising signs in the 1980s, a massive decline and stagnation in the 1990s, followed by a dramatic reawakening in the past decade. This, in a nutshell, is the story of one of the hottest fields in data science – neural networks, and more specifically, deep learning.

Deep learning fascinates major companies including Google, Facebook, Microsoft, LinkedIn, IBM and Mobileye. According to Technion Professor Michael Elad, this area came to life in the past decade following a series of impressive breakthroughs. However, “while empirical research charged full speed ahead and made surprising achievements, the required theoretical analysis trailed behind and has not, until now, managed to catch up with the rapid development in the field. Now I am happy to announce that we have highly significant results in this area that close this gap.”

In a recent article, Prof. Elad and his PhD students, Vardan Papyan and Yaniv Romano, present, for the first time, a broad theory that explains many of the important aspects of multi-layered neural networks, which are the essence of deep learning.

“One could say that up to now, we have been working with a black box called a neural network,” Elad explains. “This box has been serving us very well, but no one was able to identify the reasons and conditions for its success. In our study, we managed to open it up, analyze it and provide a theoretical explanation for the origins of its success. Now, armed with this new perspective, we can answer fundamental questions such as failure modes in this system and ways to overcome them. We believe that the proposed analysis will lead to major breakthroughs in the coming few years.”

But first a brief background explanation.

(Multi-layered) Neural Networks


Convolutional neural networks, and more broadly, multi-layered neural networks, represent an engineering approach that gives the computer a capacity for learning that brings it close to human reasoning. Ray Kurzweil, Google’s chief futurist, believes that by 2029 computerized systems will be able to demonstrate not only impressive cognitive abilities, but even genuine emotional intelligence, such as understanding a sense of humor and human emotions. Deloitte has reported that the field of deep learning is growing at a dizzying rate of 25% per year and is expected to become a USD 43 billion-a-year industry by 2020.

Neural networks, particularly the feed-forward networks that are currently at the forefront of research in machine learning and artificial intelligence, are systems that classify data rapidly, efficiently and accurately. To some extent, these artificial systems are reminiscent of the human brain: like the brain, they are made up of layers of neurons interconnected by synapses. The first layer of the network receives the input and “filters” it for the second, deeper layer, which performs additional filtering, and so on. The information thus propagates through a deep and intricate artificial network, at the end of which the desired output is obtained.
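The layer-by-layer “filtering” described above can be sketched in a few lines of Python. This is a minimal illustration that assumes fully connected layers with a ReLU nonlinearity. The weights are hypothetical values chosen by hand, not a trained network, and the function names are our own.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]
Layer = Tuple[Matrix, Vector]  # (weights, bias) for one layer


def relu(v: Vector) -> Vector:
    """Keep positive responses and zero out the rest (each neuron's 'filter')."""
    return [max(0.0, x) for x in v]


def dense(weights: Matrix, bias: Vector, x: Vector) -> Vector:
    """Weighted sum for one fully connected layer: weights @ x + bias."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def forward(layers: List[Layer], x: Vector) -> Vector:
    """Pass the input through each layer in turn; each output feeds the next layer."""
    for weights, bias in layers:
        x = relu(dense(weights, bias, x))
    return x


# Hypothetical, hand-picked weights: 3 inputs -> 2 hidden neurons -> 1 output.
EXAMPLE_LAYERS: List[Layer] = [
    ([[1.0, -1.0, 0.5], [0.5, 0.5, -1.0]], [0.0, 0.1]),
    ([[1.0, 2.0]], [-0.5]),
]

print(forward(EXAMPLE_LAYERS, [1.0, 0.0, 2.0]))  # prints [1.5]
```

In a real network the weights are learned from examples rather than set by hand, and there are many more layers and neurons.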

If, for example, the task is to identify faces, the first layers take the raw input and extract basic features such as the boundaries between the different areas of the face image; the next layers identify more specific elements such as eyebrows, pupils and eyelids; the deeper layers identify more complex parts of the face, such as the eyes; and the end result is the identification of a particular face, i.e., of a specific person. “Obviously the process is far more complex, but this is the principle: each layer is a sort of filter that transmits processed information to the next layer at an increasing level of abstraction. In this context, the term ‘deep learning’ refers to the multiple layers in the neural network, a structure that has been empirically found to be especially effective for identification tasks.

“The hierarchical structure of these networks enables them to analyze complex information, identify patterns in it, categorize it, and more. Their great strength lies in the fact that they can learn from examples: if we feed them millions of tagged images of people, cats, dogs and trees, the network can learn to identify these categories in new images, and do so at unprecedented levels of accuracy compared with previous approaches in machine learning.”

The first artificial neural network was presented by McCulloch and Pitts in 1943. In the 1960s, Frank Rosenblatt from Cornell University introduced the first learning algorithm for which convergence could be proven. In the 1980s, important empirical achievements were added to this development.
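Rosenblatt’s algorithm is usually identified with the perceptron learning rule. On linearly separable training data, that rule is guaranteed to stop making mistakes after a finite number of updates. The sketch below assumes that classic rule and a hypothetical toy dataset, the logical AND function; the function names are our own.

```python
from typing import List, Tuple

Sample = Tuple[List[float], int]  # (features, label in {-1, +1})


def train_perceptron(data: List[Sample], epochs: int = 100) -> Tuple[List[float], float]:
    """Classic perceptron rule: nudge the weights toward every misclassified sample."""
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, y in data:
            activation = sum(wi * xi for wi, xi in zip(w, x)) + b
            if y * activation <= 0:  # wrong side of (or on) the decision boundary
                w = [wi + y * xi for wi, xi in zip(w, x)]
                b += y
                mistakes += 1
        if mistakes == 0:  # converged: every sample is classified correctly
            break
    return w, b


def predict(w: List[float], b: float, x: List[float]) -> int:
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else -1


# Hypothetical toy data: logical AND, which is linearly separable.
AND_DATA: List[Sample] = [([0.0, 0.0], -1), ([0.0, 1.0], -1), ([1.0, 0.0], -1), ([1.0, 1.0], 1)]
w, b = train_perceptron(AND_DATA)
print([predict(w, b, x) for x, _ in AND_DATA])
```

A single perceptron like this can only separate classes with a straight line (a hyperplane). Stacking many such units into layers is what gives modern networks their expressive power.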

It was clear to all the scientists engaged in this field in those years that there was great potential here, but they were utterly discouraged by the many failures, and the field went into a long period of hibernation. Then, less than a decade ago, came a great revival. Why? “Because of the dramatic surge in computing capabilities, making it possible to run more daring algorithms on far more data. Suddenly, these networks succeeded in highly complex tasks: identifying handwritten digits (with accuracy of 99% and above), identifying emotions such as sadness, humor and anger in a given text, and more.” One of the key figures in this revival was Yann LeCun, a professor at NYU who persisted in studying these networks even when the task seemed hopeless. Together with Prof. Geoffrey Hinton and Prof. Yoshua Bengio from Canada, Prof. LeCun is one of the founding fathers of this revolutionary technology.

Real Time Translation

In November 2012, Rick Rashid, director of research at Microsoft, presented a simultaneous translation system that the company had developed on the basis of deep learning. At a lecture in China, Rashid spoke in English and his words were translated by computer, so that the Chinese audience heard the lecture in their own language in real time. The process made few mistakes: one per 14 words on average, compared with one in four, a rate that had been considered acceptable and even successful only a few years earlier. This translation technology is used today in Skype and in various other Microsoft products.

Beating the World Champion

Google did not sit idly by. It recruited the best minds in the field, including the aforementioned Geoffrey Hinton, and has become one of the leading research centers in deep learning. The Google Brain project was built on a system of unprecedented size and power, based on 16,000 computer cores producing around 100 trillion inter-neuronal interactions. This project, originally established for image content analysis, quickly spread to the rest of Google’s technologies. Google’s AlphaGo system, which is based on a convolutional neural network, managed to beat the world champion at the game of Go. Facebook, a younger company, has already made significant inroads into deep learning with the help of the aforementioned Yann LeCun, with extremely impressive achievements such as identifying people in photos. The objective, according to Facebook CEO Mark Zuckerberg, is to create computerized systems that will be superior to human beings in terms of vision, hearing, language and thinking.

Today, no one doubts that deep learning is a dramatic revolution in computational speed and in processing huge amounts of data with a high level of accuracy. Moreover, its applications are already being used in a huge variety of areas: encryption, intelligence, autonomous vehicles (Mobileye’s solution is based on this technology), object recognition in stills and video, speech recognition and more.

Back to the Foundations

Surprisingly enough, however, the great progress described above has not included a basic theoretical understanding that explains the source of these networks’ effectiveness. Theory, as in many other cases in the history of technology, has lagged behind practice.

This is where Prof. Elad’s group enters the picture, with a new article that presents a basic and in-depth theoretical explanation for deep learning. The people responsible for the discovery are Prof. Elad and his three doctoral students: Vardan Papyan, Jeremias Sulam and Yaniv Romano. Surprisingly, this team came to this field almost by accident, from research in a different arena: sparse representations. Sparse representations are a universal information model that describes data as molecules formed from the combination of a small number of atoms (hence the term ‘sparse’). This model has been tremendously successful over the past two decades and has led to significant breakthroughs in signal and image processing, machine learning, and other fields.
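To make the atoms-and-molecules picture concrete, here is a small Python sketch. It assumes a hypothetical dictionary of four unit-norm atoms. It then uses matching pursuit to recover which atoms a signal is built from. Matching pursuit is one standard greedy sparse-coding method; we chose it for illustration, and it is not necessarily a method used by the Technion group. With an orthonormal dictionary like this one the recovery is exact. Practical dictionaries are usually overcomplete, and there greedy pursuits succeed only under additional conditions.

```python
from typing import List

Vector = List[float]

S = 0.5
# Hypothetical dictionary: each row is a unit-norm atom (a normalized 4x4 Hadamard basis).
DICTIONARY: List[Vector] = [
    [S, S, S, S],
    [S, -S, S, -S],
    [S, S, -S, -S],
    [S, -S, -S, S],
]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def synthesize(coeffs: Vector, atoms: List[Vector]) -> Vector:
    """Build a 'molecule' as a weighted sum of a few atoms."""
    signal = [0.0] * len(atoms[0])
    for c, atom in zip(coeffs, atoms):
        signal = [s + c * a for s, a in zip(signal, atom)]
    return signal


def matching_pursuit(signal: Vector, atoms: List[Vector], max_atoms: int, tol: float = 1e-12) -> Vector:
    """Greedily pick the atom most correlated with the residual, subtract it, repeat."""
    residual = list(signal)
    coeffs = [0.0] * len(atoms)
    for _ in range(max_atoms):
        corr = [dot(residual, atom) for atom in atoms]
        best = max(range(len(atoms)), key=lambda k: abs(corr[k]))
        if abs(corr[best]) < tol:  # nothing left to explain
            break
        coeffs[best] += corr[best]
        residual = [r - corr[best] * a for r, a in zip(residual, atoms[best])]
    return coeffs


# A sparse signal: only 2 of the 4 atoms are used.
TRUE_COEFFS = [3.0, 0.0, -2.0, 0.0]
SIGNAL = synthesize(TRUE_COEFFS, DICTIONARY)
print(matching_pursuit(SIGNAL, DICTIONARY, max_atoms=2))
```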

So, how does this model relate to deep neural networks? It turns out that the principle of sparsity continues to play a major role, even more so in this case. “Simply put, in our study we propose a hierarchical mathematical model for representing the processed information, whereby atoms are connected to each other and form molecules, just as before, except that now the assembly process continues: molecules form cells, cells form tissues, which in turn form organs and, in the end, the complete body – a body of information – is formed. The neural network’s job is to break up the complete information into its components in order to understand the data and its origin.”
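One way to write this hierarchy down is sketched below. The notation is our own assumption rather than something taken from this article. It uses dictionaries $D_1, \dots, D_K$, whose columns are the atoms at each level, and representations $\Gamma_1, \dots, \Gamma_K$, each of which must be sparse.

```latex
X = D_1 \Gamma_1, \qquad \Gamma_1 = D_2 \Gamma_2, \qquad \dots, \qquad \Gamma_{K-1} = D_K \Gamma_K,
\qquad \text{with every } \Gamma_i \text{ sparse};
\quad \text{substituting, } X = D_1 D_2 \cdots D_K \, \Gamma_K .
```

Read from right to left, a few “molecules” in $\Gamma_K$ assemble into the denser layers above them until the full signal $X$ appears. The network’s task runs in the opposite direction: starting from $X$, it recovers $\Gamma_1, \Gamma_2, \dots$ in turn.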

Papyan and Sulam laid the initial groundwork in two articles completed in June 2016, while in the follow-up work Papyan and Romano shifted the focus to deep learning and neural networks. The final article, as noted, puts forward the theoretical framework that explains the operating principles of deep neural networks and their success in learning tasks.

“We can illustrate the significance of our discovery using an analogy to the world of physics,” says Prof. Elad. “Imagine an astrophysicist who monitors the movement of celestial objects in search of the trajectories of stars. To explain these trajectories, and even predict them, he will define a specific mathematical model. In order for the model to be in line with reality, he will find that it is necessary to add complementary elements to it – black holes and antimatter, which will be investigated later using experimental tools.

“We took the same path: We started from the real scenario of data being processed by a multi-layered neural network, and formulated a mathematical model for the data to be processed. This model enabled us to show that one possible way to decompose the data into its building blocks is the feed-forward neural network, but this could now be accompanied by an accurate prediction of its performance. Here, however, and unlike the astrophysical analogy, we can not only analyze and predict reality but also improve the studied systems, since they are under our control.”
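The claim that a feed-forward network can decompose data into its building blocks can be illustrated with a toy two-layer model. As we understand the group’s follow-up work, it reads each network layer as one step of a “layered thresholding” estimate. The sketch assumes that reading: each layer computes $\mathrm{ReLU}(D_i^T v - b_i)$, which is exactly a ReLU layer with weights $D_i^T$ and bias $-b_i$. The dictionaries, the threshold 0.1 and all names are hypothetical. The dictionaries are deliberately chosen orthonormal so that thresholding is guaranteed to succeed here.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(M: Matrix, v: Vector) -> Vector:
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def transpose(M: Matrix) -> Matrix:
    return [list(col) for col in zip(*M)]


def threshold_layer(D: Matrix, v: Vector, b: float) -> Vector:
    """One feed-forward layer read as nonnegative soft thresholding: ReLU(D^T v - b)."""
    return [max(0.0, z - b) for z in matvec(transpose(D), v)]


s = 0.5
# Hypothetical dictionaries (columns are atoms). D1: orthonormal 4x4 Hadamard basis.
# D2: two orthonormal atoms with non-overlapping support, so G1 = D2 @ G2 stays sparse.
D1: Matrix = [[s, s, s, s], [s, -s, s, -s], [s, s, -s, -s], [s, -s, -s, s]]
D2: Matrix = [[0.6, 0.0], [0.8, 0.0], [0.0, 0.8], [0.0, 0.6]]

# Synthesize data from the model: the deepest representation uses a single atom.
G2_true = [2.0, 0.0]
G1_true = matvec(D2, G2_true)  # sparse: only the first two entries are nonzero
X = matvec(D1, G1_true)        # the observed signal

# The "forward pass": one thresholding step per layer.
G1_hat = threshold_layer(D1, X, 0.1)
G2_hat = threshold_layer(D2, G1_hat, 0.1)
print(G1_hat, G2_hat)
```

The thresholds shrink the recovered values, so G2_hat[0] comes out slightly below 2. What the pass does recover is the support, that is, which atoms are active at each level. How reliably this works for real, overcomplete dictionaries is the kind of question the article says the theory can answer.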

Prof. Elad emphasizes that “our expertise in this context is related to handling signals and images, but the theoretical paradigm that we present in the article could be relevant to any field, from cyberspace to autonomous navigation, from deciphering emotion in a text to speech recognition. The field of deep learning has made huge advances even without us, but the theoretical infrastructure that we are providing here closes much of the enormous gap between theory and practice that existed in this field, and I have no doubt that our work will provide a huge boost to the practical aspects of deep learning.”

About the Doctoral Students

When Vardan Papyan completed his master’s degree under Prof. Elad’s supervision, he did not intend to continue to a PhD. However, during his final MSc exam, the examiners determined that his work was almost a complete doctoral thesis. After consulting with the Dean of the Computer Science Faculty and the Dean of the Technion’s Graduate School, it was decided to admit him to the direct PhD track with the understanding that he would complete his doctorate in less than a year.

Yaniv Romano, a student in the direct PhD track, has already won several prestigious awards. In the summer of 2015, he spent several months as an intern at Google in Mountain View, USA, where he left an unforgettable impression with his original solution to the single-image super-resolution problem, which is being considered for use in several Google products.